Friday, March 29, 2019

Sunday, March 10, 2019

Monday, March 04, 2019

Pro-Republican Georgia election links

https://isgb.blogspot.com/2020/11/joe-biden.html

https://isgb.blogspot.com/2020/11/kamala-harris.html

Found on a wiki that's not Wikipedia. All the far-left information available. Vote Republican to keep the two-party system.

Rev. Dr. Raphael Gamaliel Warnock has been the senior pastor of Ebenezer Baptist Church in Atlanta since 2005. He is running for the U.S. Senate in Georgia.

Raphael Warnock was born in Savannah, Ga., "the 11th of 12 children born to two Pentecostal-Holiness ministers." Raphael Warnock served as a "youth pastor for six years and assistant pastor for four years" at the Abyssinian Baptist Church of New York.

Bio

Raphael Warnock has served, since 2005, as the Senior Pastor of the historic Ebenezer Baptist Church, spiritual home of The Rev. Dr. Martin Luther King, Jr. The son of two Pentecostal pastors, Dr. Warnock responded to the call to ministry at a very early age, and became, at age 35, the fifth and the youngest person ever called to the senior pastorate of Ebenezer Church, founded in 1886. Yet, before coming to Ebenezer, “America’s Freedom Church,” Dr. Warnock was blessed to study and serve within the pastoral ranks of leading congregations also known for their deep spiritual roots and strong public witness. He began as an intern at the Sixth Avenue Baptist Church of Birmingham, Alabama, where he was ordained by the Rev. Dr. John T. Porter, who himself had served many years earlier as Martin Luther King, Jr.’s pulpit assistant at Dexter Avenue Baptist Church in Montgomery, Alabama and later under the tutelage of Martin Luther King, Sr. at Ebenezer. From there, he served for six years as the Youth Pastor and four years as Assistant Pastor at the historic Abyssinian Baptist Church of New York City – also one of the nation’s leading congregations. Finally, before taking the helm of Ebenezer, Pastor Warnock immersed himself further in the challenges of urban ministry, in the 21st century, while serving for 4 ½ years as the Senior Pastor of Baltimore’s Douglas Memorial Community Church, also a spiritual base of social activism.

Under Pastor Warnock’s leadership, more than 4,000 new members have joined Ebenezer, enhancing the Church’s legacy of social activism with both spiritual and numerical growth. Several new ministries have been launched, including Worship on Wednesdays (WOW), EbenezerFest, Cutting Through Crisis, Faith & Fitness, Jericho Lounge, Young Adult Ministry and After Midnight (A Watch Night Worship Celebration). The Church’s income has continued to grow, even during the Great Recession, making possible over $5 million in capital improvements to the Educational Building and the Horizon Sanctuary, including HVAC systems, upgrades to the roof and enhancements in sound and lighting systems. Additionally, our pastor led us in a successful fundraising campaign to build the $8.5 million Martin Luther King, Sr. Community Resources Complex. The brand new building houses the Church’s administrative offices, the fellowship hall, classrooms, meeting rooms, a Cyber Café and an MLK Collaborative of four nonprofit partners, including Operation HOPE, Casey Family Programs, The Center for Working Families and nsoro Educational Foundation, all engaged together in helping individuals and families to improve their own life outcomes and live healthier and more prosperous lives.

Rev. Warnock is married to Mrs. Ouleye Ndoye Warnock.[1]

Education

The Rev. Dr. Warnock graduated from Morehouse College cum laude in 1991, receiving the B.A. degree in psychology. He also holds a Master of Divinity (M.Div.) degree from Union Theological Seminary, New York City, from which he graduated with honors and distinctions. Seeing his pastoral work as tied to the ministry of scholarship and the life of the mind, Rev. Warnock continued his graduate studies at Union, receiving a Master of Philosophy (M.Phil.) degree and a Doctor of Philosophy (Ph.D.) degree in the field of systematic theology.[2]

Activism

Rev. Warnock has a long history of backing leftist causes célèbres and legal cases:

- As a pastor, Rev. Warnock sees the whole community as his parish. Accordingly, he has defended voting rights in his own state of Georgia. And when, in 2006, the State of Louisiana failed to protect the voting rights of recent Katrina evacuees, he led a “Freedom Caravan” of citizens back to New Orleans to vote. Dr. Warnock has addressed his ministry to urban men through a barbershop ministry called “Cutting Thru Crisis” and through a series of Bible Studies held in a local car wash. Newsweek magazine covered this example of his unconventional approach to ministry in an article entitled, “For Those Who’ve Fallen, Salvation Amid the Suds.” Dr. Warnock has taken on the contradictions in our criminal justice system through his preaching and through his fierce public advocacy. Along with many others, he stood up for Troy Davis, Trayvon Martin and Genarlow Wilson, a high school student ensnared by a poorly written law and sentenced to 10 years in prison. Wilson was released on appeal and has since graduated from Morehouse College. His bold and visionary leadership has been further demonstrated through his public policy work with The National Black Leadership Commission on AIDS and his ongoing efforts to provide tuition support for young people pursuing post-secondary education. Dr. Warnock is a graduate of the Leadership Program sponsored by the Greater Baltimore Committee, a graduate of the Summer Leadership Institute of Harvard University and a graduate of Leadership Atlanta.

- He is a member of Alpha Phi Alpha Fraternity, Inc., the 100 Black Men of Atlanta, Inc. and a Lifetime Member of the NAACP.[3]

Honors

Rev. Warnock’s activism was honored in 2016, as his footprints were placed on the International Civil Rights Walk of Fame. Among other honors, Rev. Warnock has been recognized as one of “Atlanta’s 55 Most Powerful” by Atlanta magazine, one of the “New Kingdom Voices” by Gospel Today magazine, one of “God’s Trombones” by the Rainbow Push Coalition, a “Good Shepherd” by Associated Black Charities, one of the “Chosen Pastors” by The Gospel Choice Awards, “A Man of Influence” by the Atlanta Business League, one of The Root 100 in 2010, 2011, 2012 and 2013 (TheRoot.com a division of the Washington Post), one of the “Top 10 Most Influential Black Ministers” by Loop 21, one of the “20 Top African American Church Leaders” by TheRoot.com and he has received the Reverend Dr. William A. Jones Justice Award from the National Action Network. He is a National TRIO Achiever Award recipient and has been honored by induction into the Martin Luther King, Jr. Board of Preachers.[4]

Raphael Warnock Pastor during Fidel Castro Visit

In an article headlined "Georgia Senate candidate Warnock was assistant pastor of church that hosted, praised Fidel Castro in 1995,"[5] it was revealed that Raphael Warnock was a pastor at the Abyssinian Baptist Church when Cuban Marxist dictator Fidel Castro visited in 1995. Raphael Warnock's campaign spokesman distanced the Senate candidate from the Marxist dictator.

- “Twenty-five years ago, Reverend Warnock was a youth pastor and was not involved in any decisions at the time,” said campaign spokesman Terence Clarke. The Warnock campaign declined to provide further comment on whether he attended that particular event.

The article reported that, in C-SPAN footage of the event, head pastor Calvin Butts praised Castro, alluding to chants of "Fidel! Fidel!" Further, it explained that Butts "defended the decision to invite Castro, arguing that 'our tradition is to welcome those who are visionaries, those who are revolutionaries, and those who want the freedom of all people around the world.'"

The scene was described in a 1995 New York Times article:[6]

- "Early in the evening, about 1,300 people sat shoulder to shoulder in the pews of the Abyssinian Baptist Church, waiting for Mr. Castro. A diverse crowd, including whites, blacks and Hispanic people, had spent hours on line for the ticketed event. Many said they were there to show support for Mr. Castro's efforts to derail the Helms-Burton bill in Congress, which seeks to tighten the economic embargo. Many blacks, in particular, said they admired him because they believed he had created harmony between the races in Cuba.

- "I was here the last time when he came to the Theresa Hotel" in Harlem, said David Brothers, 76. "I felt I had to come again. He's a principled man and he doesn't bow down. He did a whole lot for Africans."

- Asked whether he expected to see a firebrand mellowed with age, Mr. Brothers said: "He's still the revolutionary. The suit don't make the man."

- Mr. Castro entered the church at about 7:45 to roars of "Fidel! Fidel! Fidel!" The crowd chanted, "Cuba, si, bloqueo, no." Mr. Castro strode to the podium, relishing the boisterous show of support, which lasted for 10 minutes.

- He smiled and nodded at the politicans [sic] and leaders seated in the balcony above, among them Representative Charles Rangel, Representative Nydia Velasquez, Representative Jose Serrano and Angela Davis, the 1970's black radical who is now a professor of philosophy at the University of California at Santa Cruz. Ms. Davis smiled at him and gave him a fisted salute."

Butts speaks at Warnock's church

Raphael Warnock Mar 19, 2011.

The Rev. Calvin O. Butts III of New York's The Abyssinian Baptist Church will be the speaker at Ebenezer Baptist Church.

The Rev. Calvin O. Butts III of New York's The Abyssinian Baptist Church spoke at Ebenezer Baptist Church as it celebrated its 125th anniversary in August 2012.

Rep. John Lewis, D-Atlanta, who recently received the Presidential Medal of Freedom, will receive a special honor for his dedication to racial equality. Also being honored is state Sen. Leroy Johnson, who is chairman of the church's board of trustees.

During the service, the Rev. Raphael Warnock, the church's senior pastor, will unveil a work by Donald Bermudez commemorating Ebenezer's history.[7]

Praise from Calvin Butts

A September 2001 article from the Baltimore Sun addresses Raphael Warnock's new position as pastor of Douglas Memorial Community Church in West Baltimore, as well as his participation "in a daylong symposium: 'The Black Church's Response to the HIV/AIDS Epidemic.'"[8]

From the article:

- The Rev. Calvin Butts, pastor of Abyssinian, said he wasn't surprised Warnock was hired.

- "He's one of the brightest and most intelligent and academically prepared young clergymen in the country," Butts said. "He got along excellently with the church members of all ages. He's a forceful leader, very serious about the issues that impact especially the African-American community. He's one of the more thoughtful preachers of his generation."

New Georgia Project

Rev. Raphael Warnock is chair of the New Georgia Project.

Fair Fight endorsement

According to Lauren Groh-Wargo:

- Last month, Fair Fight and Stacey Abrams did something for the first time and something we likely won’t do again — endorse in a contested Democratic U.S. Senate race. On January 30, Reverend Raphael Warnock launched his campaign for U.S. Senate. Fair Fight and Stacey endorsed his run soon thereafter, and Fair Fight is making in-kind contributions close to the maximum limit to support his run.

- I wanted to share some background on why Rev. Warnock is so special and important to me personally, and to our work overall. Rev. Warnock and Stacey have had a long relationship and have worked together on many important issues through the years. The three of us worked closely together in 2014 when we first launched the New Georgia Project, an organization founded to register an electorate that was increasingly younger and more diverse. SOS Brian Kemp launched a suppressive, evil investigation into the New Georgia Project that year, trying to shut down the largest voter registration drive the state had seen in decades, charging us with voter registration fraud. Ultimately, we were vindicated, and no wrongdoing was found. But those months in many ways are the genesis of where we are today.

- I met Stacey’s longtime friend and former colleague Allegra Lawrence-Hardy, and was connected with Dara Lindenbaum — both who came on that year as legal counsel in our fight against the investigation, and then in the Writ of Mandamus we filed against Kemp — as we had unknowingly uncovered at the time the “exact match” law designed to keep people off voter rolls. 40,000 of the 86,000 forms NGP submitted that year weren’t showing up on the rolls, and so the Mandamus action was meant to force him to register those voters. Though that suit was unsuccessful, the issue evolved into another lawsuit filed by the Lawyers Committee For Civil Rights Under Law, and that lawsuit resulted in 30,000 voters being reinstated to the rolls who had been flagged in 2014 and cancelled out of the system.

- When Kemp launched his investigation that year and the subpoena arrived and the fight quickly escalated, Stacey and I reached out to Rev. Warnock. He jumped in and worked side by side with us to fight back against Kemp’s attempt to shut down and criminalize voter registration. For Rev. Warnock’s kindness and fearlessness in that fight, I will be forever grateful. It was a very difficult time as we expected at any moment our canvassing offices to be raided, and we had to periodically shut the operation down because we were worried about our canvassers being harassed or arrested. Stacey stepped down from her role as Chair of NGP when she filed for the gubernatorial race in 2017, and Rev. Warnock stepped up as the new Chair of the organization. Now Francys Johnson, another fighter for voting rights and the former State President of the GA NAACP, has become the Chair of NGP.

- Rev. Warnock is the senior pastor at Ebenezer Baptist Church, one of our co-plaintiffs in our nonpartisan Fair Fight Action litigation, and he with his church have fought alongside us to get relief from the courts for Georgia voters.

- Rev. Warnock is also a leader on numerous progressive issues, including gun safety, criminal justice reform, and civil and human rights. It is an honor to call him a friend, and I could not be happier for the Georgians we fight for every day that he is stepping up to run for US Senate. His run has the chance to transform the conversation about the importance of Georgia in the 2020 election.

- I hope some of this lends some background as to why Fair Fight’s PAC is taking unprecedented steps to support his candidacy — and why we think you should join us! Please consider making a contribution to Rev. Warnock and help him become the next U.S. Senator from Georgia.[9]

Influence

As an opinion leader, his perspective has been sought out by electronic and print media, locally, nationally and internationally. His work has been featured on CNN, the CBS Evening News, the Huffington Post and in the Atlanta Journal and Constitution which hailed him “a leader among Atlanta – and national – clergy, a fitting heir to the mantle once worn by The Reverend Dr. Martin Luther King, Jr.” At President Barack and First Lady Michelle Obama’s request, Dr. Warnock delivered the closing prayer at the 2013 Inaugural Prayer Service held at the National Cathedral and delivered the sermon for the Annual White House Prayer breakfast in March 2016.

Rev. Warnock has preached his message of salvation and liberation in such places as The Riverside Church of New York and the International Festival of Homiletics. But he is just as comfortable in a small, country church or an urban storefront. His first book is entitled, The Divided Mind of the Black Church; Theology, Piety & Public Witness (NYU Press, 2014).[10]

SONG connection

In June 2018, Ebenezer Baptist Church and partners raised money to bail folks out of jail in time for Father’s Day and Juneteenth. For the church, the bailout was part of a larger focus on mass incarceration.

“We who believe in freedom cannot rest until we dismantle mass incarceration,” said Rev. Raphael Warnock from his pulpit at Ebenezer Baptist Church on Wednesday night, kicking off the Freedom Day Bailout Campaign.

“Part of how that happens is we criminalize poor people. We have effectively made being poor in America a crime,” he said.

He and other critics nationwide want to change how the criminal justice system treats people who don’t have the cash to pay bail or fines or fees.

For the same charges, it’s a different outcome for a person who has $1,000 to pay bail and someone who doesn’t have that money. Without money, people wait for trial in jail.

Or when someone can’t pay a fine, more, unaffordable trouble piles up.

Several times, Warnock referred to the Ferguson report, the scathing product of a federal investigation of the Ferguson, Missouri police. The report documented systemic racism among officers, a pattern of excessive force and other violations of law, plus the effect of fees and fines on folks who can’t pay them.

“As we saw with Ferguson report, poor people get caught up in the system, with fees and fines and then they end up in jail. If they have employment, they end up losing it, if they have children, their children end up in trouble. So this is a serious moral issue,” said Warnock.

Warnock said they hope to bail out a couple dozen people — and that there are similar campaigns going on across the country to address the cash bail system.

In the meantime, campaigners are also asking prosecutors to refrain from demands for cash bail for the vast majority of offenses, said Tiffany Roberts, chair of the Ebenezer Baptist Church Social Justice Ministry.

The new city rule, which had Ebenezer’s support, eliminates bail for some cases that come up in Atlanta’s Municipal Court.

And a new state law requires judges to consider a person’s ability to pay bail on misdemeanors before setting it.

High-profile bailouts in opposition to mass incarceration have already been happening across the country, including national events like Black Mamas Bail Out, set up in time for Mother’s Day. In Atlanta, one of the organizers was Southerners On New Ground, which is also a partner in this campaign, among many other organizations.

Warnock said the church’s ongoing work on ending mass incarceration has included helping people restrict arrest records from public view. That can be done if the arrest didn’t result in a conviction. Those arrest records can prevent people from getting jobs or apartments, Warnock said, even if the person was never convicted of anything.

He also said that in the spring, the church is hosting an interfaith conference on mass incarceration.[11]

Nan Orrock connection

Raphael Warnock is close to Nan Orrock.

Renitta Shannon connection

State Representative Renitta Shannon, February 8, 2020:

Raphael Warnock is not “new to this” he’s “true to this”. I remember organizing with him in 2014 through #MoralMondays Georgia, to conduct civil disobedience at the Georgia Capitol to demand lawmakers #ExpandMedicaid in Georgia. He was 1 of 39 arrested fighting for healthcare for Georgia’s most vulnerable. I trust him to take that same fight to the US senate. I could not be more excited to stand with him. Let’s get him there 👇🏾

MMGA

In Georgia, organizers from Occupy our Homes Atlanta, DSA, and other groups that had worked together began talking about a Moral Monday Georgia (MMGA) coalition in August of 2013. The new state NAACP president, Rev. Francys Johnson, led the first rally, for Medicaid expansion, which attracted 500 people and significant media coverage. He also brought Rev. Barber to speak in Atlanta that day.

A core group of 100-200 activists from 40 Georgia organizations, including DSA, held weekly rallies on a variety of issues drawn from a 12-point platform. On three of the rally days, groups sat in at the Capitol and legislative offices, resulting in 73 arrests of 61 people, 10 DSA members among them. During the last week of the session in mid-March, MMGA—joined by Rev. Dr. Raphael Warnock of Martin Luther King’s Ebenezer Baptist Church—drew national and international media attention. This summer, groups of arrestees participated in a 16-city “Jailed for Justice” tour of the state, hoping to spread MMGA outside of Atlanta.[12]

An Open Letter to Governor Nathan Deal

An Open Letter to Governor Nathan Deal from Moral Monday GA By Moral Monday Georgia, April 30, 2014

As of last night, at the stroke of midnight, the clock of human progress turned back decades. You have caused unfair, unjust and harmful consequences for regular everyday Georgians with the passage of HB 990, HB 772, HB 714 and SB 98.

Sadly, your inaction has and will continue to cost real lives and hardships for Georgians who are already struggling. You have chosen politics over principle, a short term view of narrow self-interest over a long term vision of what's actually best for Georgia, making public policy turns that further marginalize our most vulnerable citizens while also crippling the state's prospects for economic recovery and prosperity...

- Reverend Raphael Warnock, Historic Ebenezer Baptist Church

- Dr Francys Johnson, NAACP Georgia President

- Jackie Rodriguez, Georgia Now President

- Tim Franzen, American Friends Service Committee

- La’die Mansfield, Global Organizing Institute

- Dianne Mathiowetz, Georgia Peace and Justice Coalition

- Neil Sardana, Atlanta Jobs with Justice[13]

MMGA Arrests

Nearly 40 Moral Monday Georgia activists were arrested Tuesday March 18, 2014, for interrupting proceedings throughout the Georgia Capitol in an effort to urge Gov. Nathan Deal to expand Medicaid - and block legislation that would strip him of the authority to do so.

16 Arrests - Senate Gallery: Joe Beasley, 77, Edward Loring, 74, Gary Kennedy, 51, Richard Miles Rustay, 84, Marquerite Casey, 65, Shawn Adelman, 32, female, Lorraine Fontana, 66, Minnie Ruffin, 72, Gladys B. Rustay, 81, Emma Stitt, 23, Morgan Swann, 62, female, Emma French, 22, John Slaughter, 74, Ray Miklethun, 79, Gregory Ames, 65, Robert Goodman, 73.

12 Arrests - at Governor's Office: Shanan Eugene Jones, 39, George F. Watson, Jr., 65, Francys Johnson, Jr., 34, male, Jeffrey Blair Benoit, 55, John Evans, 81, Raphael Warnock, 44, Karen Elaine Reagle, 71, Katherine Acker, 61, George Johnson, 42, Ronald Allen, 38, Fred Douglas Taylor, 71, Donald Bender, 73.

11 Arrests - Outside Senate Doors: Kevin Arthur Morgan, 66, Emilia Sigrid Kaiser, 26, female, Daniel Sean Hanley, 32, Sara Katherine Gregory, 31, Dawn Gibson, 39, Corey A. Hardiman, 22, male, Fred Albert, 67, Jacqueline Rodriquez, 31, Michael Schumm, 51, Neil Yukt Sardana, 32, Misty Novitch, 27.[14]

Saturday, March 02, 2019

You Will Lose Your Job to a Robot—and Sooner Than You Think

I don’t care what your job is. If you dig ditches, a robot will dig them better. If you’re a magazine writer, a robot will write your articles better. If you’re a doctor, IBM’s Watson will no longer “assist” you in finding the right diagnosis from its database of millions of case studies and journal articles. It will just be a better doctor than you.

And CEOs? Sorry. Robots will run companies better than you do. Artistic types? Robots will paint and write and sculpt better than you. Think you have social skills that no robot can match? Yes, they can. Within 20 years, maybe half of you will be out of jobs. A couple of decades after that, most of the rest of you will be out of jobs.

In one sense, this all sounds great. Let the robots have the damn jobs! No more dragging yourself out of bed at 6 a.m. or spending long days on your feet. We’ll be free to read or write poetry or play video games or whatever we want to do. And a century from now, this is most likely how things will turn out. Humanity will enter a golden age.

But what about 20 years from now? Or 30? We won’t all be out of jobs by then, but a lot of us will—and it will be no golden age. Until we figure out how to fairly distribute the fruits of robot labor, it will be an era of mass joblessness and mass poverty. Working-class job losses played a big role in the 2016 election, and if we don’t want a long succession of demagogues blustering their way into office because machines are taking away people’s livelihoods, this needs to change, and fast. Along with global warming, the transition to a workless future is the biggest challenge by far that progressive politics—not to mention all of humanity—faces. And yet it’s barely on our radar.

But if Google’s translation algorithm was better, did that mean its voice recognition was better too? And its ability to answer queries? Hmm. How could we test that? We decided to open presents instead of cogitating over this.

But after that was over, the subject of erasers somehow came up. Which ones are best? Clear? Black? Traditional pink? Come to think of it, why are erasers traditionally pink? “I’ll ask Google!” I told everyone. So I pulled out my phone and said, “Why are erasers pink?” Half a second later, Google told me.

(In case you’re curious, Google got the answer from Design*Sponge: “The eraser was originally produced by the Eberhard Faber Company…The erasers featured pumice, a volcanic ash from Italy that gave them their abrasive quality, along with their distinctive color and smell.”)

Still not impressed? When Watson famously won a round of Jeopardy! against the two best human players of all time, it needed a computer the size of a bedroom to answer questions like this. That was only seven years ago.

What do pink erasers have to do with the fact that we’re all going to be out of a job in a few decades? Consider: Last October, an Uber trucking subsidiary named Otto delivered 2,000 cases of Budweiser 120 miles from Fort Collins, Colorado, to Colorado Springs—without a driver at the wheel. Within a few years, this technology will go from prototype to full production, and that means millions of truck drivers will be out of a job.

Automated trucking doesn’t rely on newfangled machines, like the powered looms and steam shovels that drove the Industrial Revolution of the 19th century. Instead, like Google’s ability to recognize spoken words and answer questions, self-driving trucks—and cars and buses and ships—rely primarily on software that mimics human intelligence. By now everyone’s heard the predictions that self-driving cars could lead to 5 million jobs being lost, but few people understand that once artificial-intelligence software is good enough to drive a car, it will be good enough to do a lot of other things too. It won’t be millions of people out of work; it will be tens of millions.

This is what we mean when we talk about “robots.” We’re talking about cognitive abilities, not the fact that they’re made of metal instead of flesh and powered by electricity instead of chicken nuggets.

In other words, the advances to focus on aren’t those in robotic engineering—though they are happening, too—but the way we’re hurtling toward artificial intelligence, or AI. While we’re nowhere near human-level AI yet, the progress of the past couple of decades has been stunning. After many years of nothing much happening, suddenly robots can play chess better than the best grandmaster. They can play Jeopardy! better than the best humans. They can drive cars around San Francisco—and they’re getting better at it every year. They can recognize faces well enough that Welsh police recently made the first-ever arrest in the United Kingdom using facial recognition software. After years of plodding progress in voice recognition, Google announced earlier this year that it had reduced its word error rate from 8.5 percent to 4.9 percent in 10 months.

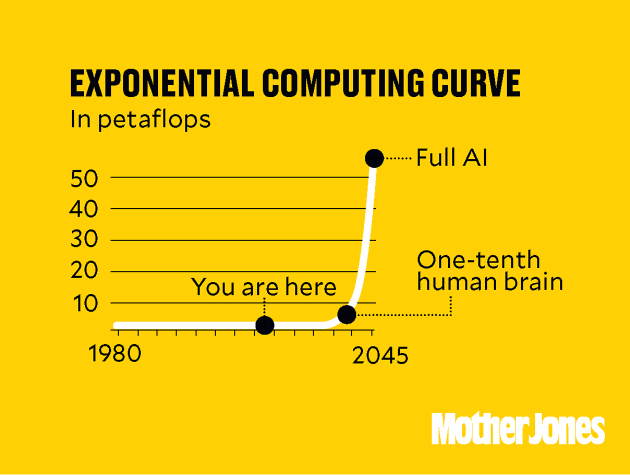

All of this is a sign that AI is improving exponentially, a product of both better computer hardware and software. Hardware has historically followed a growth curve called Moore’s law, in which power and efficiency double every couple of years, and recent improvements in software algorithms have been even more explosive. For a long time, these advances didn’t seem very impressive: Going from the brainpower of a bacterium to the brainpower of a nematode might technically represent an enormous leap, but on a practical level it doesn’t get us that much closer to true artificial intelligence. However, if you keep up the doubling for a while, eventually one of those doubling cycles takes you from the brainpower of a lizard (who cares?) to the brainpower of a mouse and then a monkey (wow!). Once that happens, human-level AI is just a short step away.
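The doubling argument is easier to see with numbers. What follows is only a rough sketch: the starting value of 1.0, the cycle counts, and the function names are illustrative assumptions, not measurements of any real hardware or animal brain.

```python
def capacity_after(doublings: int, start: float = 1.0) -> float:
    """Capacity after a given number of doubling cycles (start is an arbitrary unit)."""
    return start * 2 ** doublings


def doublings_needed(start: float, target: float) -> int:
    """Count the doubling cycles needed to grow from start to at least target."""
    count = 0
    level = start
    while level < target:
        level *= 2
        count += 1
    return count


def last_step_share(doublings: int) -> float:
    """Fraction of the final capacity that arrives in the last doubling alone."""
    if doublings < 1:
        raise ValueError("need at least one doubling")
    final = capacity_after(doublings)
    previous = capacity_after(doublings - 1)
    return (final - previous) / final


if __name__ == "__main__":
    # Ten doublings multiply the starting capacity roughly a thousandfold.
    print(capacity_after(10))
    # Cycles required to close a hypothetical billionfold gap.
    print(doublings_needed(1.0, 1_000_000_000))
    # Share of the final total contributed by the very last cycle.
    print(last_step_share(30))
```

However many cycles you run, the last one contributes half of the final total. That is why progress can look unimpressive for a long time and then seem to arrive all at once.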

Are we really this close to true AI? Here’s a yardstick to think about. Even with all this doubling going on, until recently computer scientists thought we were still years away from machines being able to win at the ancient game of Go, usually regarded as the most complex human game in existence. But last year, a computer beat a Korean grandmaster considered one of the best of all time, and earlier this year it beat the highest-ranked Go player in the world. Far from slowing down, progress in artificial intelligence is now outstripping even the wildest hopes of the most dedicated AI cheerleaders. Unfortunately, for those of us worried about robots taking away our jobs, these advances mean that mass unemployment is a lot closer than we feared—so close, in fact, that it may be starting already. But you’d never know that from the virtual silence about solutions in policy and political circles.

I’m hardly alone in thinking we’re on the verge of an AI Revolution. Many who work in the software industry—people like Bill Gates and Elon Musk—have been sounding the alarm for years. But their concerns are largely ignored by policymakers and, until recently, often ridiculed by writers tasked with interpreting technology or economics. So let’s take a look at some of the most common doubts of the AI skeptics.

#1: We’ll never get true AI because computing power won’t keep doubling forever. We’re going to hit the limits of physics before long. There are several pretty good reasons to dismiss this claim as a roadblock. To start, hardware designers will invent faster, more specialized chips. Google, for example, announced last spring that it had created a microchip called a Tensor Processing Unit, which it claimed was up to 30 times faster and 80 times more power efficient than an Intel processor for machine learning tasks. A huge array of those chips are now available to researchers who use Google’s cloud services. Other chips specialized for specific aspects of AI (image recognition, neural networking, language processing, etc.) either exist already or are certain to follow.

What’s more, this raw power is increasingly being harnessed in a manner similar to the way the human brain works. Your brain is not a single, superpowerful computing device. It’s made up of about 100 billion neurons working in parallel—i.e., all at the same time—to create human-level intelligence and consciousness. At the lowest level, neurons operate in parallel to create small clusters that perform semi-independent actions like responding to a specific environmental cue. At the next level, dozens of these clusters work together in each of about 100 “sub-brains”—distinct organs within the brain that perform specialized jobs such as speech, visual processing, and balance. Finally, all these sub-brains operate in parallel, and the resulting overall state is monitored and managed by executive functions that make sense of the world and provide us with our feeling that we have conscious control of our actions.

This doesn’t mean AI is here already. Far from it. This “massively parallel” architecture still presents enormous programming challenges, but as we get better at exploiting it we’re certain to make frequent breakthroughs in software performance. In other words, even if Moore’s law slows down or stops, the total power of everything put together—more use of custom microchips, more parallelism, more sophisticated software, and even the possibility of entirely new ways of doing computing—will almost certainly keep growing for many more years.

#2: Even if computing power keeps doubling, it has already been doubling for decades. You guys keep predicting full-on AI, but it never happens. It’s true that during the early years of computing there was a lot of naive optimism about how quickly we’d be able to build intelligent machines. But those rosy predictions died in the ’70s, as computer scientists came to realize that even the fastest mainframes of the day produced only about a billionth of the processing power of the human brain. It was a humbling realization, and the entire field has been almost painfully realistic about its progress ever since.

We’ve finally built computers with roughly the raw processing power of the human brain—although only at a cost of more than $100 million and with an internal architecture that may or may not work well for emulating the human mind. But in another 10 years, this level of power will likely be available for less than $1 million, and thousands of teams will be testing AI software on a platform that’s actually capable of competing with humans.
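To see what that price forecast implies, here is a rough back-of-the-envelope sketch. It assumes the cost of brain-scale computing falls at a steady exponential rate, which the text does not state outright, and the function name is made up for illustration. Under that assumption, a drop from $100 million to $1 million in 10 years works out to the price halving about every year and a half.

```python
import math

def implied_halving_time(start_cost, end_cost, years):
    """Years per price halving, assuming a steady exponential decline (an assumption)."""
    halvings = math.log2(start_cost / end_cost)  # number of times the price must halve
    return years / halvings

# Figures from the text: more than $100 million today, under $1 million in 10 years.
halving_years = implied_halving_time(100e6, 1e6, 10)
print(f"{halving_years:.2f} years per halving")  # prints "1.51 years per halving"
```

A hundredfold price drop takes between six and seven halvings, so the forecast only holds if costs keep falling at roughly that pace for the whole decade.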

#3: Okay, maybe we will get full AI. But it only means that robots will act intelligent, not that they’ll really be intelligent. This is just a tedious philosophical debating point. For the purposes of employment, we don’t really care if a smart computer has a soul—or if it can feel love and pain and loyalty. We only care if it can act like a human being well enough to do anything we can do. When that day comes, we’ll all be out of jobs even if the computers taking our places aren’t “really” intelligent.

#4: Fine. But waves of automation—steam engines, electricity, computers—always lead to predictions of mass unemployment. Instead they just make us more efficient. The AI Revolution will be no different. This is a popular argument. It’s also catastrophically wrong.

The Industrial Revolution was all about mechanical power: Trains were more powerful than horses, and mechanical looms were more efficient than human muscle. At first, this did put people out of work: Those loom-smashing weavers in Yorkshire—the original Luddites—really did lose their livelihoods. This caused massive social upheaval for decades until the entire economy adapted to the machine age. When that finally happened, there were as many jobs tending the new machines as there used to be doing manual labor. The eventual result was a huge increase in productivity: A single person could churn out a lot more cloth than she could before. In the end, not only were as many people still employed, but they were employed at jobs tending machines that produced vastly more wealth than anyone had thought possible 100 years before. Once labor unions began demanding a piece of this pie, everyone benefited.

The AI Revolution will be nothing like that. When robots become as smart and capable as human beings, there will be nothing left for people to do because machines will be both stronger and smarter than humans. Even if AI creates lots of new jobs, it’s of no consequence. No matter what job you name, robots will be able to do it. They will manufacture themselves, program themselves, repair themselves, and manage themselves. If you don’t appreciate this, then you don’t appreciate what’s barreling toward us.

In fact, it’s even worse. In addition to doing our jobs at least as well as we do them, intelligent robots will be cheaper, faster, and far more reliable than humans. And they can work 168 hours a week, not just 40. No capitalist in her right mind would continue to employ humans. They’re expensive, they show up late, they complain whenever something changes, and they spend half their time gossiping. Let’s face it: We humans make lousy laborers.

If you want to look at this through a utopian lens, the AI Revolution has the potential to free humanity forever from drudgery. In the best-case scenario, a combination of intelligent robots and green energy will provide everyone on Earth with everything they need. But just as the Industrial Revolution caused a lot of short-term pain, so will intelligent robots. While we’re on the road to our Star Trek future, but before we finally get there, the rich are going to get richer—because they own the robots—and the rest of us are going to get poorer because we’ll be out of jobs. Unless we figure out what we’re going to do about that, the misery of workers over the next few decades will be far worse than anything the Industrial Revolution produced.

Wait, wait, skeptics will say: If all this is happening as we speak, why aren’t people losing their jobs already? Several sharp observers have made this point, including James Surowiecki in a recent issue of Wired. “If automation were, in fact, transforming the US economy,” he wrote, “two things would be true: Aggregate productivity would be rising sharply, and jobs would be harder to come by than in the past.” But neither is happening. Productivity has actually stalled since 2000 and jobs have gotten steadily more plentiful ever since the Great Recession ended. Surowiecki also points out that job churn is low, average job tenure hasn’t changed much in decades, and wages are rising—though he admits that wage increases are “meager by historical standards.”

True enough. But as I wrote four years ago, since 2000 the share of the population that’s employed has decreased; middle-class wages have flattened; corporations have stockpiled more cash and invested less in new products and new factories; and as a result of all this, labor’s share of national income has declined. All those trends are consistent with job losses to old-school automation, and as automation evolves into AI, they are likely to accelerate.

That said, the evidence that AI is currently affecting jobs is hard to assess, for one big and obvious reason: We don’t have AI yet, so of course we’re not losing jobs to it. For now, we’re seeing only a few glimmers of smarter automation, but nothing even close to true AI.

Remember that artificial intelligence progresses exponentially. This means that even as computer power doubles from a trillionth of a human brain’s power to a billionth and then a millionth, it has little effect on the level of employment. Then, in the relative blink of an eye, the final few doublings take place and robots go from having a thousandth of human brainpower to full human-level intelligence. Don’t get fooled by the fact that nothing much has happened yet. In another 10 years or so, it will.
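The arithmetic behind that claim can be sketched in a few lines. This is only an illustration: it treats "brainpower" as a single number that doubles at a constant pace, which is a simplifying assumption, and the function and variable names are invented for the example.

```python
import math

def doublings_between(start_fraction, end_fraction):
    """Number of doublings needed to grow from one fraction of brainpower to another."""
    return math.log2(end_fraction / start_fraction)

# Milestones named in the text, as fractions of one human brain.
milestones = [
    ("a trillionth", 1e-12),
    ("a billionth", 1e-9),
    ("a millionth", 1e-6),
    ("a thousandth", 1e-3),
    ("full human level", 1.0),
]

# Each thousandfold jump costs about 10 doublings (2**10 = 1024).
for (name_a, frac_a), (name_b, frac_b) in zip(milestones, milestones[1:]):
    print(f"{name_a} -> {name_b}: {doublings_between(frac_a, frac_b):.2f} doublings")

total = doublings_between(1e-12, 1.0)      # about 39.86, so 40 whole doublings
after_30 = 1e-12 * 2**30                   # power reached after 30 of those 40
print(f"total doublings: {math.ceil(total)}")
print(f"after 30 doublings: {after_30:.4%} of a human brain")
```

Three quarters of the way through the doublings, the machine still has only about a tenth of a percent of human brainpower. All of the visible change is packed into the last ten steps.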

So let’s talk about which jobs are in danger first. Economists generally break employment into cognitive versus physical jobs and routine versus nonroutine jobs. This gives us four basic categories of work:

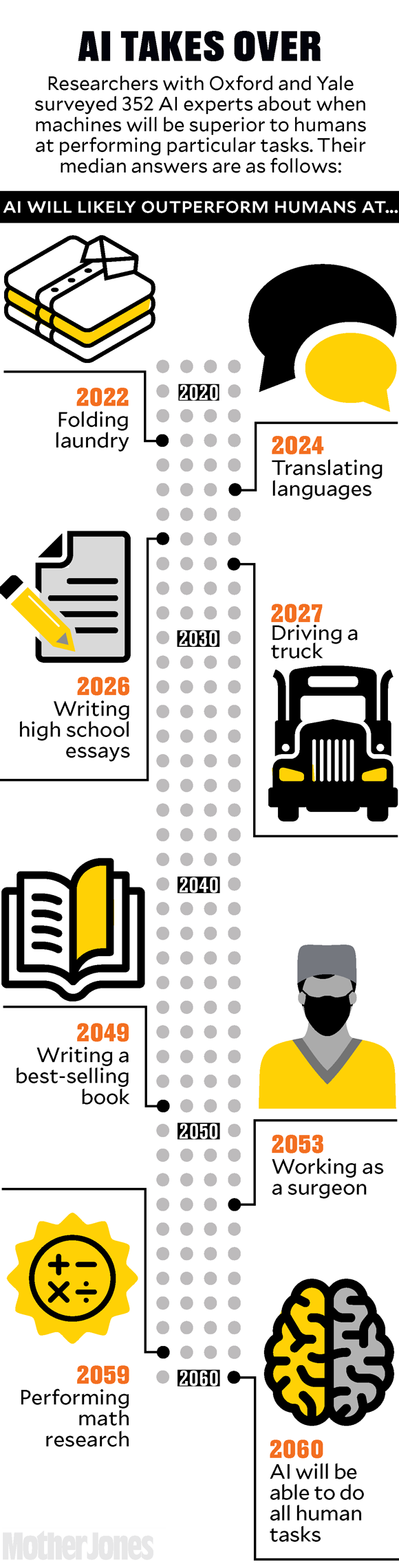

But we don’t need full AI for everything. The machine-learning researchers estimate that the jobs of speech transcribers, translators, commercial drivers, retail salespeople, and similar workers could be fully automated during the 2020s. Within a decade after that, all routine jobs could be gone.

2060 seems a long way off, but if the Oxford-Yale survey is right, we’ll face an employment apocalypse far sooner than that: the disappearance of routine work of all kinds by the mid-2030s. That represents nearly half the US labor force. The consulting firm PricewaterhouseCoopers recently released a study saying much the same. It predicts that 38 percent of all jobs in the United States are “at high risk of automation” by the early 2030s, most of them in routine occupations. In the even nearer term, the World Economic Forum predicts that the rich world will lose 5 million jobs to robots by 2020, while a group of AI experts, writing in Scientific American, figures that 40 percent of the 500 biggest companies will vanish within a decade.

Not scared yet? Kai-Fu Lee, a former Microsoft and Google executive who is now a prominent investor in Chinese AI startups, thinks artificial intelligence “will probably replace 50 percent of human jobs.” When? Within 10 years. Ten years! Maybe it’s time to really start thinking hard about AI.

And forget about putting the genie back in the bottle. AI is coming whether we like it or not. The rewards are just too great. Even if America did somehow stop AI research, it would only mean that the Chinese or the French or the Brazilians would get there first. Russian President Vladimir Putin agrees. “Artificial intelligence is the future, not only for Russia but for all humankind,” he announced in September. “Whoever becomes the leader in this sphere will become the ruler of the world.” There’s just no way around it: For the vast majority of jobs, work as we know it will come steadily to an end between about 2025 and 2060.

So who benefits? The answer is obvious: the owners of capital, who will control most of the robots. Who suffers? That’s obvious too: the rest of us, who currently trade work for money. No work means no money.

But things won’t actually be quite that grim. After all, fully automated farms and factories will produce much cheaper goods, and competition will then force down prices. Basic material comfort will be cheap as dirt.

One way or another, then, the answer to the mass unemployment of the AI Revolution has to involve some kind of sweeping redistribution of income that decouples it from work. Or a total rethinking of what “work” is. Or a total rethinking of what wealth is. Let’s consider a few of the possibilities.

The welfare state writ large: This is the simplest to think about. It’s basically what we have now, but more extensive. Unemployment insurance will be more generous and come with no time limits. National health care will be free for all. Anyone without a job will qualify for some basic amount of food and housing. Higher taxes will pay for it, but we’ll still operate under the assumption that gainful employment is expected from anyone able to work.

This is essentially the “bury our heads in the sand” option. We refuse to accept that work is truly going away, so we continue to punish people who aren’t employed. Jobless benefits remain stingy so that people are motivated to find work—even though there aren’t enough jobs to go around. We continue to believe that eventually the economy will find a new equilibrium.

This can’t last for too long, and millions will suffer during the years we continue to delude ourselves. But it will protect the rich for a while.

Universal basic income #1: This is a step further down the road. Everyone would qualify for a certain level of income from the state, but the level of guaranteed income would be fairly modest because we would still want people to work. Unemployment wouldn’t be as stigmatized as it is in today’s welfare state, but neither would widespread joblessness be truly accepted as a permanent fact of life. Some European countries are moving toward a welfare state with cash assistance for everyone.

Universal basic income #2: This is UBI on steroids. It’s available to everyone, and the income level is substantial enough to provide a satisfying standard of living. This is what we’ll most likely get once we accept that mass unemployment isn’t a sign of lazy workers and social decay, but the inevitable result of improving technology. Since there’s no personal stigma attached to joblessness and no special reason that the rich should reap all the rewards of artificial intelligence, there’s also no reason to keep the universal income level low. After all, we aren’t trying to prod people back into the workforce. In fact, the time will probably come when we actively want to do just the opposite: provide an income large enough to motivate people to leave the workforce and let robots do the job better.

Silicon Valley—perhaps unsurprisingly—is fast becoming a hotbed of UBI enthusiasm. Tech executives understand what’s coming, and that their own businesses risk a backlash unless we take care of its victims. Uber has shown an interest in UBI. Facebook CEO Mark Zuckerberg supports it. Ditto for Tesla CEO Elon Musk and Slack CEO Stewart Butterfield. A startup incubator called Y Combinator is running a pilot program to find out what happens if you give people a guaranteed income.

There are even some countries that are now trying it. Switzerland rejected a UBI proposal in 2016, but Finland is experimenting with a small-scale UBI that pays the unemployed about $700 per month even after they find work. UBI is also getting limited tryouts by cities in Italy and Canada. Right now these are all pilot projects aimed at learning more about how to best run a UBI program and how well it works. But as large-scale job losses from automation start to become real, we should expect the idea to spread rapidly.

A tax on robots: This is a notion raised by a draft report to the European Parliament and endorsed by Bill Gates, who suggests that robots should pay income tax and payroll tax just like human workers. That would keep humans more competitive. Unfortunately, there’s a flaw here: The end result would be to artificially increase the cost of employing robots, and thus the cost of the goods they produce. Unless every country creates a similar tax, it accomplishes nothing except to push robot labor overseas. We’d be worse off than if we simply let the robots take our jobs in the first place. Nonetheless, a robot tax could still have value as a way of modestly slowing down job losses. Economist Robert Shiller suggests that we should consider “at least modest robot taxes during the transition to a different world of work.” And where would the money go? “Revenue could be targeted toward wage insurance,” he says. In other words, a UBI.

Socialization of the robot workforce: In this scenario, which would require a radical change in the US political climate, private ownership of intelligent robots would be forbidden. The market economy we have today would continue to exist with one exception: The government would own all intelligent robots and would auction off their services to private industry. The proceeds would be divided among everybody.

Progressive taxation on a grand scale: Let the robots take all the jobs, but tax all income at a flat 90 percent. The rich would still have an incentive to run businesses and earn more money, but for the most part labor would be considered a societal good, like infrastructure, not the product of individual initiative.

Wealth tax: Intelligent robots will be able to manufacture material goods and services cheaply, but there will still be scarcity. No matter how many robots you have, there’s only so much beachfront property in Southern California. There are only so many original Rembrandts. There are only so many penthouse suites. These kinds of things will be the only real wealth left, and the rich will still want them. So if robots make the rich even richer, they’ll bid up the price of these luxuries commensurately, and all that’s left is to tax them at high rates. The rich still get their toys, while the rest of us get everything we want except for a view of the sun setting over the Pacific Ocean.

A hundred years from now, all of this will be moot. Society will adapt in ways we can’t foresee, and we’ll all be far wealthier, safer, and more comfortable than we are today—assuming, of course, that the robots don’t kill us all, Skynet fashion.

But someone needs to be thinking hard about how to prepare for what happens in the meantime. Not many are. Last year, for example, the Obama White House released a 48-page report called “Preparing for the Future of Artificial Intelligence.” That sounds promising. But it devoted less than one page to economic impacts and concluded only that “policy questions raised by AI-driven automation are important but they are best addressed by a separate White House working group.”

Regrettably, the coming jobocalypse has so far remained the prophecy of a few Cassandras: mostly futurists, academics, and tech executives. For example, Eric Schmidt, chairman of Google’s parent company, believes that AI is coming faster than we think, and that we should provide jobs to everyone during the transition. “The country’s goal should be full employment all the time, and do whatever it takes,” he says.

Another sharp thinker about our jobless future is Martin Ford, author of Rise of the Robots. Mass joblessness, he warns, isn’t limited to low-skill workers. Nor is it something we can fight by committing to better education. AI will decimate any job that’s “predictable”—which means nearly all of them. Many of us might not like to hear this, but Ford is unsentimental about the work we do. “Relatively few people,” he says, are paid “primarily to engage in truly creative work or ‘blue sky’ thinking.”

So how do we get these ideas into the political mainstream? One thing is certain: The monumental task of dealing with the AI Revolution will be almost entirely up to the political left. After all, when the automation of human labor begins in earnest, the big winners are initially going to be corporations and the rich. Because of this, conservatives will be motivated to see every labor displacement as a one-off event, just as they currently view every drought, every wildfire, and every hurricane as a one-off event. They refuse to see that global warming is behind changing weather patterns because dealing with climate change requires environmental regulations that are bad for business and bad for the rich. Likewise, dealing with an AI Revolution will require new ways of distributing wealth. In the long run this will be good even for the rich, but in the short term it’s a pretty scary prospect for those with money—and one they’ll fight zealously. Until they have no choice left, conservatives are simply not going to admit this is happening, let alone think about how to address it. It’s not in their DNA.

Other candidates are equally unlikely. The military thinks about automation all the time—but primarily as a means of killing people more efficiently, not as an economic threat. The business community is a slave to quarterly earnings and in any case will be too divided to be of much help. Labor unions have good reason to care, but by themselves they’re too weak nowadays to have the necessary clout with policymakers.

Nor are we likely to get much help from governments, which mostly don’t even understand what’s happening. Google’s Schmidt puts it bluntly. “The gap between the government, in terms of their understanding of software, let alone AI, is so large that it’s almost hopeless,” he said at a conference earlier this year. Certainly that’s true of the Trump administration. Asked about AI being a threat to jobs, Treasury Secretary Steven Mnuchin stunningly waved it off as a problem that’s still 50 or 100 years in the future. “I think we’re, like, so far away from that,” he said. “Not even on my radar screen.” This drew a sharp rebuke from former Treasury Secretary Larry Summers: “I do not understand how anyone could reach the conclusion that all the action with technology is half a century away,” he said. “Artificial intelligence is transforming everything from retailing to banking to the provision of medical care.”

So who’s left? Like it or not, the only real choice to sound the alarm outside the geek community is the Democratic Party, along with its associated constellation of labor unions, think tanks, and activists. Imperfect as it is—and its reliance on rich donors makes it conspicuously imperfect—it’s the only national organization that has both the principles and the size to do the job.

Unfortunately, political parties are inherently short-term thinkers. Democrats today are absorbed with fighting President Donald Trump, saving Obamacare, pushing for a $15 minimum wage—and arguing about all those things. They have no time to think hard about the end of work.

Nonetheless, somebody on the left with numbers, clout, power, and organizing energy—hopefully all the above—had better start. Conventional wisdom says Trump’s victory last year was tipped over the edge by a backlash among working-class voters in the Upper Midwest. When blue-collar workers start losing their jobs in large numbers, we’ll see a backlash that makes 2016 look like a gentle breeze. Either liberals start working on answers now, or we risk voters rallying around far more effective and dangerous demagogues than Trump.

Despite the amount of media attention that both robots and AI have gotten over the past few years, it’s difficult to get people to take them seriously. But start to pay attention and you see the signs: An Uber car can drive itself. A computer can write simple sports stories. SoftBank’s Pepper robot already works in more than 140 cellphone stores in Japan and is starting to get tryouts in America too. Alexa can order replacement Pop-Tarts before you know you need them. A Carnegie Mellon computer that seems to have figured out human bluffing beat four different online-poker pros earlier this year. California, suffering from a lack of Mexican workers, is ground zero for the development of robotic crop pickers. Sony is promising a robot that will form an emotional bond with its owner.

These are all harbingers, the way a dropping barometer signals a coming storm—not the possibility of a storm, but the inexorable reality. The two most important problems facing the human race right now are the need for widespread deployment of renewable energy and figuring out how to deal with the end of work. Everything else pales in comparison. Renewable energy already gets plenty of attention, even if half the country still denies that we really need it. It’s time for the end of work to start getting the same attention.